Overview

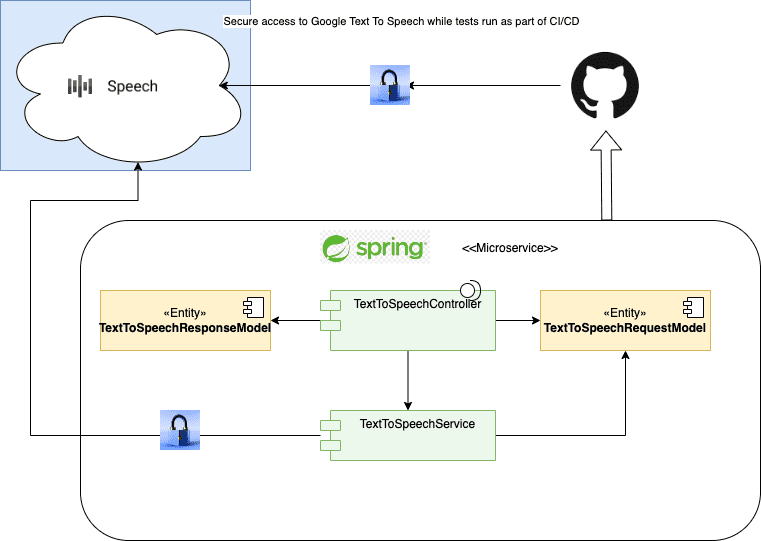

In this article I will share how I wrote a Spring Boot Microservice that uses the Google Text-To-Speech service to convert text into an MP3 audio file.

Setup required to follow the steps in this article

Enabling GitHub Actions

All free and paid accounts have GitHub Actions enabled by default. GitHub provides, at the time of writing, 2,000 free minutes of GitHub Actions, which should give you plenty of minutes to run the builds described in this article.

To check your setup, head to your GitHub profile and choose Settings. On the left, you should see a menu item called Actions. Here is how it looks for me:

Google Cloud setup

To use Google’s services you will need the following:

- An account

- A project

- the gcloud command line

While you should be able to set up a Google account and a project on your own, you will also need to install the gcloud command-line tool for your shell. Thankfully, this is very easy. Just follow the instructions on this page.

Please make sure you initialise your Google environment as described in the above link.

Setting up Google Cloud Identity Federation

The Spring Boot Microservice

If you are not familiar with how to get started with a Spring Boot Microservice, you have several options:

- You can Google “Spring initialiser” and head off to Spring Initializr (https://start.spring.io).

- You can use an IDE like IntelliJ or VS Code, both of which offer support for Spring Boot (look at the plugins)

- You can fork my code and work on your copy

Once you have the code available on your PC, open it using your IDE of choice.

Writing the tests first

Following Clean Code best practices, we should start by writing the tests. The general architecture of the system will be something like this:

The Microservice is really simple. The bulk of it resides in the TextToSpeechService which receives a Request Model and returns a Response Model. So let’s see how to write an integration test for this service.

The Service Integration Test

    package io.techwings.media.texttospeech.integration;

    import com.google.protobuf.ByteString;
    import io.techwings.media.texttospeech.app.model.TextToSpeechRequestModel;
    import io.techwings.media.texttospeech.app.services.TextToSpeechService;
    import io.techwings.media.texttospeech.main.TextToSpeechApplication;
    import org.junit.jupiter.api.Assertions;
    import org.junit.jupiter.api.BeforeEach;
    import org.junit.jupiter.api.Test;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.boot.test.context.SpringBootTest;

    import java.io.BufferedReader;
    import java.io.FileReader;
    import java.nio.file.Files;
    import java.nio.file.Paths;
    import java.util.stream.Collectors;

    @SpringBootTest(classes = TextToSpeechApplication.class)
    class TextToSpeechServiceTests {

        private String outputPath;
        private String buffer;

        @Autowired
        TextToSpeechService textToSpeechService;

        @Value("${user.home}")
        private String userHome;

        private TextToSpeechRequestModel model;

        @BeforeEach
        public void setup() throws Exception {
            Assertions.assertNotNull(userHome);
            try (BufferedReader reader =
                         new BufferedReader(new FileReader("src/test/resources/chapter1.txt"))) {
                // Join with a space so that words at line boundaries do not run together
                buffer = reader.lines().collect(Collectors.joining(" "));
            }
            outputPath = Paths.get(userHome, "tmp").resolve("output.mp3").toString();
            model = this.createTestModel();
        }

        @Test
        void testTextToSpeech_mustGenerateOutputFile() throws Exception {
            convertTextToAudioAndAssessResults();
        }

        @Test
        void testTextToSpeechWithNullOutputPath_mustStoreToDefaultLocation() throws Exception {
            model.setOutputPath(null);
            convertTextToAudioAndAssessResults();
        }

        @Test
        void testTextToSpeechWithEmptyOutputPath_mustStoreToDefaultLocation() throws Exception {
            // Empty (not null) path: the Service must fall back to the default location
            model.setOutputPath("");
            convertTextToAudioAndAssessResults();
        }

        @Test
        void testTextToSpeechWithEmptyInput_mustStoreOutputFile() throws Exception {
            model.setInputText(null);
            convertTextToAudioAndAssessResults();
        }

        private void convertTextToAudioAndAssessResults() throws Exception {
            ByteString audioContents = retrieveAudioContent(model);
            // synthesizeText normalises the model, so its output path is never null or empty here
            String resolvedOutputPath = model.getOutputPath();
            textToSpeechService.writeOutputAudioFile(audioContents, resolvedOutputPath);
            Assertions.assertTrue(Files.exists(Paths.get(resolvedOutputPath)));
        }

        private ByteString retrieveAudioContent(TextToSpeechRequestModel model) throws Exception {
            ByteString audioContents = textToSpeechService.synthesizeText(model);
            Assertions.assertNotNull(audioContents);
            return audioContents;
        }

        private TextToSpeechRequestModel createTestModel() {
            TextToSpeechRequestModel testModel = new TextToSpeechRequestModel();
            testModel.setInputText(buffer);
            testModel.setOutputPath(outputPath);
            return testModel;
        }
    }

With this test we want to demonstrate that:

- Given a text input and an output path, Google Text-To-Speech will produce an mp3 file at the specified location

- Given a text and no output path, the mp3 file will be saved to a default location (I chose tmp/output.mp3 under the user’s home directory, as this is usually writable)

- Given no text and an output path, an empty mp3 will be saved at the specified location
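The default-location rule in the second bullet can be sketched in isolation, without Spring or the Google libraries. This is only an illustration under stated assumptions: the class name OutputPathDefaults and the method resolve are hypothetical, and the user's home directory is passed in as a plain argument rather than injected.

```java
import java.nio.file.Paths;

// Hypothetical helper, for illustration only; it is not part of the article's code base.
final class OutputPathDefaults {

    private OutputPathDefaults() {
    }

    // Returns the requested path when it contains text,
    // otherwise falls back to <userHome>/tmp/output.mp3.
    static String resolve(String requestedPath, String userHome) {
        boolean hasText = requestedPath != null && !requestedPath.isBlank();
        return hasText
                ? requestedPath
                : Paths.get(userHome, "tmp", "output.mp3").toString();
    }

    public static void main(String[] args) {
        String home = System.getProperty("user.home");
        System.out.println(resolve(null, home));                       // default location
        System.out.println(resolve("", home));                         // default location
        System.out.println(resolve("/data/audio/chapter1.mp3", home)); // explicit path is kept
    }
}
```

Treating null, empty and whitespace-only paths the same way keeps the three output-path tests above down to a single rule.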

The TextToSpeechRequestModel

    package io.techwings.media.texttospeech.app.model;

    import com.fasterxml.jackson.annotation.JsonProperty;
    import lombok.AllArgsConstructor;
    import lombok.Data;
    import lombok.NoArgsConstructor;

    @Data
    @NoArgsConstructor
    @AllArgsConstructor
    public class TextToSpeechRequestModel {

        @JsonProperty(value = "input", required = true)
        private String inputText;

        @JsonProperty(value = "output_path")
        private String outputPath;
    }

Please note the Lombok annotations at the top of the class. These generate getters and setters for the properties, as well as a no-arg and an all-args constructor for Spring to use.

Please also note the Jackson @JsonProperty annotations on the fields. These indicate the name of the key we want to map from the JSON payload. Spring Boot will use these annotations to automatically bind requests made to the Spring Boot Controller (which carry a JSON payload) to the TextToSpeechRequestModel. We will see this later when talking about the Controller.

The integration test creates a default TextToSpeechRequestModel before each test. The logic of each test is pretty much the same:

- Create a test Request Model

- Invoke the Service that invokes Google Text-To-Speech to convert to audio

- Verify that the MP3 output file has been created

The TextToSpeechService

This is the main component in the architecture. It receives a TextToSpeechRequestModel, invokes Google Text-To-Speech and saves the returned MP3 file to the desired output location.

    package io.techwings.media.texttospeech.app.services;

    import com.google.cloud.texttospeech.v1.*;
    import com.google.protobuf.ByteString;
    import io.techwings.media.texttospeech.app.model.TextToSpeechRequestModel;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.stereotype.Service;
    import org.springframework.util.StringUtils;

    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.io.OutputStream;
    import java.nio.file.Files;
    import java.nio.file.Path;
    import java.nio.file.Paths;

    @Service
    public class TextToSpeechService {

        @Value("${user.home}")
        private String userHome;

        /**
         * Uses the Text-to-Speech client to synthesize the text carried by the request model.
         *
         * @param model the request model holding the input text (e.g. "Hello there!") and the output path
         * @return the synthesized audio content, MP3-encoded
         * @throws Exception on TextToSpeechClient errors
         */
        public ByteString synthesizeText(TextToSpeechRequestModel model) throws Exception {
            this.validateAndNormaliseModel(model);
            // Instantiates a client
            try (TextToSpeechClient textToSpeechClient = TextToSpeechClient.create()) {
                SynthesisInput input = prepareSynthesisInput(model);
                // Build the voice request
                VoiceSelectionParams voice = setVoiceSelectionParams();
                // Select the type of audio file you want returned
                AudioConfig audioConfig = setAudioConfig();
                // Perform the text-to-speech request
                SynthesizeSpeechResponse response =
                        textToSpeechClient.synthesizeSpeech(input, voice, audioConfig);
                return response.getAudioContent();
            }
        }

        public void writeOutputAudioFile(ByteString audioContents,
                                         String outputFilePath) throws IOException {
            Path path = Paths.get(outputFilePath);
            Path parentDirectory = path.getParent();
            // A bare file name has no parent, so only create directories when there is one
            if (parentDirectory != null && !Files.exists(parentDirectory)) {
                Files.createDirectories(parentDirectory);
            }
            Files.deleteIfExists(path);
            try (OutputStream out = new FileOutputStream(outputFilePath)) {
                out.write(audioContents.toByteArray());
            }
        }

        private SynthesisInput prepareSynthesisInput(TextToSpeechRequestModel model) {
            // Set the text input to be synthesized
            String inputText = model.getInputText();
            return SynthesisInput.newBuilder()
                    .setText(inputText).build();
        }

        private AudioConfig setAudioConfig() {
            return AudioConfig.newBuilder()
                    .setAudioEncoding(AudioEncoding.MP3) // MP3 audio.
                    .build();
        }

        private static VoiceSelectionParams setVoiceSelectionParams() {
            return VoiceSelectionParams.newBuilder()
                    .setLanguageCode("en-GB") // BCP-47 language tag, British English here
                    .setName("en-GB-Neural2-A")
                    .build();
        }

        private void validateAndNormaliseModel(TextToSpeechRequestModel model) {
            prepareOutputPath(model);
            normaliseInputTextAndEnsureDefaultIsNotNull(model);
        }

        private void prepareOutputPath(TextToSpeechRequestModel model) {
            String outputPath = StringUtils.hasText(model.getOutputPath())
                    ? model.getOutputPath()
                    : this.defaultPath();
            model.setOutputPath(outputPath);
        }

        private String defaultPath() {
            return Paths.get(userHome, "tmp", "output.mp3").toString();
        }

        private static void normaliseInputTextAndEnsureDefaultIsNotNull(TextToSpeechRequestModel model) {
            if (!StringUtils.hasText(model.getInputText())) {
                model.setInputText("");
            }
        }
    }

The Service is pretty simple. It relies on the Google libraries (which in turn use the Google APIs). It receives a Request Model as input, containing the input text to convert to audio and the desired output path, and validates it, falling back to defaults when either value is null or empty. It then configures the Google Text-To-Speech service (e.g. by setting the language, the preferred voice, etc.) and invokes the synthesizeSpeech method, which does the magic.

The second method offered by this Service writes the audio content to a file at a specified location. I decided to keep the two methods separate as different consumers might have different needs. A consumer might, for example, take the audio content and offer it in the response for clients to save where they please.
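To illustrate why keeping synthesis and storage separate is convenient, here is a minimal JDK-only sketch of two possible consumers of the audio bytes (what ByteString.toByteArray() returns in the Service). The class AudioSink and its methods are hypothetical names introduced for this example; they are not part of the project.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical consumers of the synthesized audio bytes, for illustration only.
final class AudioSink {

    private AudioSink() {
    }

    // Streams the audio to any target, e.g. the body of an HTTP response.
    static void copyTo(byte[] audioContent, OutputStream target) throws IOException {
        target.write(audioContent);
        target.flush();
    }

    // Saves the audio to a file, creating missing parent directories first.
    // Files.write creates the file or truncates an existing one by default.
    static Path saveTo(byte[] audioContent, Path outputFile) throws IOException {
        Path parent = outputFile.toAbsolutePath().getParent();
        if (parent != null) {
            Files.createDirectories(parent);
        }
        return Files.write(outputFile, audioContent);
    }
}
```

A consumer that only needs the bytes would call copyTo and never touch the file system, while one that wants a file on disk would call saveTo.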

At this point it pays to spend a couple of minutes on security, specifically on how to set up your local environment so that calls to the Google libraries are authenticated.

I won’t go into all the details, as there’s a Google resource for it. In short, for this code to work properly, and provided you have set up a Google project and enabled the correct APIs, the Google libraries need to authenticate you with your Google project. There is an easy way to achieve this from a local environment: run the command below, replacing ${PROJECT_ID} with your Google project id. The --billing-project option is only required if you have multiple projects. Running the command opens the browser and asks you to authenticate with Google Cloud; once that happens, a credentials file is created in a default location that the Google libraries look at to authenticate requests.

    gcloud auth application-default login --billing-project "${PROJECT_ID}"
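If authentication fails locally, it can help to check that the credentials file is where the libraries expect it. The sketch below assumes the documented default locations of the gcloud credentials file: $HOME/.config/gcloud/application_default_credentials.json on Linux and macOS, and %APPDATA%\gcloud\application_default_credentials.json on Windows. The class AdcLocation is a hypothetical helper, and the sketch ignores the GOOGLE_APPLICATION_CREDENTIALS environment variable, which takes precedence when set.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Locale;

// Hypothetical helper, for illustration only.
final class AdcLocation {

    private AdcLocation() {
    }

    // Computes the default location of the Application Default Credentials file.
    // appData is only used on Windows and may be null elsewhere.
    static Path defaultCredentialsFile(String osName, String userHome, String appData) {
        boolean windows = osName != null
                && osName.toLowerCase(Locale.ROOT).startsWith("windows");
        Path gcloudDirectory = windows
                ? Paths.get(appData, "gcloud")
                : Paths.get(userHome, ".config", "gcloud");
        return gcloudDirectory.resolve("application_default_credentials.json");
    }

    public static void main(String[] args) {
        Path file = defaultCredentialsFile(
                System.getProperty("os.name"),
                System.getProperty("user.home"),
                System.getenv("APPDATA"));
        System.out.println(file + " exists: " + Files.exists(file));
    }
}
```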

The TextToSpeechController

The Controller is very easy. It’s a passthrough between HTTP clients and the TextToSpeechService.

The TextToSpeechController Integration Test

As usual, let’s start with the integration test.

    package io.techwings.media.texttospeech.integration;

    import com.fasterxml.jackson.databind.ObjectMapper;
    import io.techwings.media.texttospeech.app.model.TextToSpeechRequestModel;
    import io.techwings.media.texttospeech.app.model.TextToSpeechResponseModel;
    import io.techwings.media.texttospeech.main.TextToSpeechApplication;
    import io.techwings.media.texttospeech.view.TextToSpeechController;
    import org.junit.jupiter.api.Assertions;
    import org.junit.jupiter.api.BeforeEach;
    import org.junit.jupiter.api.Test;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.boot.test.context.SpringBootTest;
    import org.springframework.http.HttpStatus;

    import java.io.File;
    import java.io.IOException;
    import java.nio.file.Path;
    import java.nio.file.Paths;

    @SpringBootTest(classes = TextToSpeechApplication.class)
    public class TextToSpeechControllerTest {

        @Autowired
        private TextToSpeechController controller;

        private ObjectMapper mapper;

        @BeforeEach
        public void setup() {
            mapper = new ObjectMapper();
        }

        @Test
        public void validRequest_mustGenerateOutputFile() throws Exception {
            TextToSpeechRequestModel model = this.retrieveRequestModel();
            TextToSpeechResponseModel responseModel = controller.convertTextToAudio(model);
            this.validateResponseModel(responseModel);
            this.validateJsonResponse(responseModel);
        }

        private void validateJsonResponse(TextToSpeechResponseModel responseModel) throws Exception {
            String responseJsonPath =
                    Paths.get("src", "test", "resources", "response.json").toString();
            File responseTemplateFile = Path.of(responseJsonPath).toFile();
            TextToSpeechResponseModel expected =
                    mapper.readValue(responseTemplateFile, TextToSpeechResponseModel.class);
            Assertions.assertEquals(expected, responseModel);
        }

        private TextToSpeechRequestModel retrieveRequestModel() throws Exception {
            TextToSpeechRequestModel model = getRequestModelFromJsonFile();
            Assertions.assertNotNull(model);
            return model;
        }

        private TextToSpeechRequestModel getRequestModelFromJsonFile() throws IOException {
            String jsonInputPath =
                    Paths.get("src", "test", "resources", "request.json").toString();
            File inputFile = Path.of(jsonInputPath).toFile();
            return mapper.readValue(inputFile, TextToSpeechRequestModel.class);
        }

        private void validateResponseModel(TextToSpeechResponseModel responseModel) {
            Assertions.assertNotNull(responseModel);
            // JUnit expects the arguments in (expected, actual) order
            Assertions.assertEquals(HttpStatus.CREATED.value(), responseModel.getStatusCode());
            Assertions.assertEquals(String.format("File %s created", "/tmp/output.mp3"),
                    responseModel.getResponseMessage());
        }
    }

The Controller test class has just one test (more can be added if you want a little challenge). The test uses Jackson to create a Request Model from a sample JSON file (stored under src/test/resources). It then invokes the Controller method that converts text to audio, passing the Request Model as argument, and receives a Response Model. Finally, still using Jackson, it converts the expected JSON payload into a Response Model and verifies that it is equal (in Java terms) to the Response Model returned by the Controller.
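The equality check only works if the Response Model defines value-based equality. The TextToSpeechResponseModel class is not listed in this article, so the following is a plain-Java stand-in written without Lombok or Jackson. The class name ResponseModelSketch and the int type of the status code are assumptions; the real class presumably gets the same behaviour from Lombok, like the Request Model.

```java
import java.util.Objects;

// Plain-Java stand-in for the response model, for illustration only.
final class ResponseModelSketch {

    private final int statusCode;
    private final String responseMessage;

    ResponseModelSketch(int statusCode, String responseMessage) {
        this.statusCode = statusCode;
        this.responseMessage = responseMessage;
    }

    int getStatusCode() {
        return statusCode;
    }

    String getResponseMessage() {
        return responseMessage;
    }

    // Two models are equal when all their fields are equal;
    // this is what lets assertEquals compare expected and actual models.
    @Override
    public boolean equals(Object other) {
        if (this == other) {
            return true;
        }
        if (!(other instanceof ResponseModelSketch)) {
            return false;
        }
        ResponseModelSketch that = (ResponseModelSketch) other;
        return statusCode == that.statusCode
                && Objects.equals(responseMessage, that.responseMessage);
    }

    @Override
    public int hashCode() {
        return Objects.hash(statusCode, responseMessage);
    }
}
```

Without an equals override, assertEquals would fall back to reference equality and the comparison against the model read from response.json would always fail.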

The TextToSpeechController implementation

The Controller is implemented as a Spring Boot @RestController. The difference between a @Controller and a @RestController is explained very simply in this article. In a nutshell, @RestController combines @Controller with @ResponseBody, so every return value is written straight to the response body (as JSON, by default), removing the need to annotate each handler method with @ResponseBody.

    package io.techwings.media.texttospeech.view;

    import com.google.protobuf.ByteString;
    import io.techwings.media.texttospeech.app.model.TextToSpeechRequestModel;
    import io.techwings.media.texttospeech.app.model.TextToSpeechResponseModel;
    import io.techwings.media.texttospeech.app.services.TextToSpeechService;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.http.HttpStatus;
    import org.springframework.web.bind.annotation.PostMapping;
    import org.springframework.web.bind.annotation.RequestBody;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class TextToSpeechController {

        @Autowired
        private TextToSpeechService textToSpeechService;

        @PostMapping("/textToAudio")
        public TextToSpeechResponseModel convertTextToAudio(@RequestBody TextToSpeechRequestModel model)
                throws Exception {
            ByteString audioResponse = textToSpeechService.synthesizeText(model);
            textToSpeechService.writeOutputAudioFile(audioResponse, model.getOutputPath());
            TextToSpeechResponseModel responseModel = new TextToSpeechResponseModel();
            responseModel.setStatusCode(HttpStatus.CREATED.value());
            responseModel.setResponseMessage(String.format("File %s created", model.getOutputPath()));
            return responseModel;
        }
    }

The Controller exposes a POST endpoint at the address <server>:<port>/textToAudio. The endpoint accepts the following JSON payload (which, thanks to the Jackson annotations, is then translated into a TextToSpeechRequestModel):

    {
      "input": "text-to-convert",
      "output_path": "full-path-where-to-save-the-file-to"
    }

It then invokes the Service methods to convert the text to audio and to store the content at the output path, and fills a TextToSpeechResponseModel. When this model is returned to the Controller’s consumer, Jackson transforms it into a JSON payload with the following structure:

    {"status_code":201,"response_message":"File full-path-to-file created"}

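The status_code in the payload follows the expected return status for a POST request where a resource was created (201), and comes with a descriptive message. Note that the HTTP status of the response itself stays at the Spring default of 200, since the Controller returns the model directly without setting a status. This Controller can be easily tested via Postman.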
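As an alternative to Postman, the same request can be sent from Java with the JDK's built-in HttpClient (Java 11 or later). This is a minimal sketch: the class TextToAudioClientSketch and its methods are hypothetical, the base URL assumes the service runs locally on port 8080, and the JSON is assembled by hand with only minimal escaping to keep the example dependency-free.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical client for the /textToAudio endpoint, for illustration only.
final class TextToAudioClientSketch {

    private TextToAudioClientSketch() {
    }

    // Builds the JSON payload expected by the Controller.
    static String toJson(String inputText, String outputPath) {
        return String.format("{\"input\":\"%s\",\"output_path\":\"%s\"}",
                escape(inputText), escape(outputPath));
    }

    // Minimal escaping: backslashes and double quotes only.
    // Control characters such as newlines are not handled.
    private static String escape(String value) {
        return value.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    static HttpRequest buildRequest(String baseUrl, String inputText, String outputPath) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/textToAudio"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(toJson(inputText, outputPath)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request =
                buildRequest("http://localhost:8080", "Hello there!", "/tmp/output.mp3");
        HttpResponse<String> response =
                HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

Running main requires the Microservice to be up and the Google credentials to be configured; buildRequest and toJson can be exercised on their own.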

CI/CD with GitHub Actions

Google Application Default Credentials (ADC) work well from a local desktop, but they won’t fly in a DevOps pipeline running on a third party like GitHub Actions. The reason is that there is no way to authenticate via a browser from a GitHub Action running on the GitHub servers.

The solution to this problem is to use Google Workload Identity Federation. You should already have set up Identity Federation and a service account as per the instructions at the beginning of this article. Here we will look at the GitHub Actions workflow file.

    # This workflow authenticates to Google Cloud through Workload Identity Federation,
    # then builds the project and runs the tests with Maven on JDK 17
    # For more information see: https://docs.github.com/en/actions/automating-builds-and-tests/building-and-testing-java-with-maven

    name: Java CI with Maven

    on:
      push:
        branches: [ "main" ]
      pull_request:
        branches: [ "main" ]

    jobs:
      build:
        # id-token: write lets the job request the identity token exchanged with Google
        permissions:
          contents: 'read'
          id-token: 'write'

        runs-on: ubuntu-latest

        steps:
          - uses: 'actions/checkout@v3'

          - uses: 'google-github-actions/auth@v1'
            with:
              workload_identity_provider: 'projects/$PROJECT_NUMBER/locations/global/workloadIdentityPools/$IDENTITY_FEDERATION_ID/providers/github'
              service_account: 'your-service-account@$PROJECT_ID.iam.gserviceaccount.com'

          - name: 'Set up Cloud SDK'
            uses: 'google-github-actions/setup-gcloud@v1'

          - name: Set up JDK 17
            uses: actions/setup-java@v3
            with:
              java-version: '17'
              distribution: 'temurin'
              cache: 'maven'

          - name: Build with Maven
            run: mvn clean test

This file is located under $project_root/.github/workflows. The most important part of this file is the ‘google-github-actions/auth@v1’ step. This is where GitHub passes an identity token, generated thanks to the ‘permissions’ section of the file, and Google verifies that this user/repository has been set up to allow GitHub to impersonate the service account you have set up in your project. This removes the need to store a key credentials file as a secret on GitHub, which tightens security: the token is valid for just one hour, whereas a compromised JSON key stays usable until it is revoked.

With GitHub Actions enabled for your project, every time you push to main (you can change this at the beginning of the file above) the action runs and authenticates with Google. When the integration tests run, the calls they make to the Service, which interacts with the Google libraries, are automatically authenticated.

Testing the Microservice

You can test the Microservice in three ways:

- From your local PC, once you have set up Google Application Default Credentials, just run the command: mvn clean test

- With Postman. Create a POST request pointing at http://localhost:8080/textToAudio and passing in the body a JSON payload containing the input text and desired output location

- With GitHub Actions once you push your code.